Research Interests

Computer Vision and its Applications in Human-Computer Interaction, Augmented Reality and Virtual Reality

Research Projects

Mobile Animatronics Telepresence System

Abstract: The mobile telepresence system employs a physical-virtual avatar for the distant person to provide real-time, face-to-face communication with tangible physical presence. The system has two parts: a robotic physical-virtual avatar that stands in for the remote person, and an inhabiter station where that person sees the remote scene and is captured on camera. The physical-virtual avatar consists of a physical mannequin with a pan-and-tilt head that is illuminated from inside with live imagery of the remote person's face. The mannequin is mounted on a conventional wheelchair for mobility. The avatar carries three overhead cameras that capture the frontal view with near-180-degree coverage, and the resulting panoramic video is sent to the inhabiter station. The inhabiter station includes a panoramic array of three displays arranged to coincide with the views of the three cameras mounted on the avatar. [detail]

Background: This project is part of the BeingThere Centre, a new international research centre for telepresence and telecollaboration established by Nanyang Technological University, ETH Zurich, and UNC-Chapel Hill. The collaboration brings together a team of top scientists across three continents for joint R&D projects to develop next-generation telepresence system prototypes for the 21st century.

TouchEverywhere

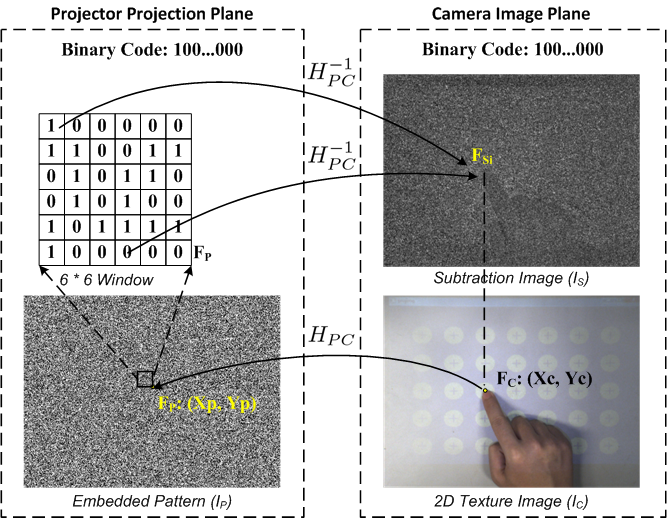

Abstract: We address how an HCI (Human-Computer Interface) that combines small device size, a large display, and touch input can be realized with just a projector and a camera. The key is a properly embedded structured light sensing scheme that lets a regular light-colored table surface serve as both a projection screen and a touch-sensitive display surface. A random binary pattern codes the structured light at pixel accuracy and is embedded into the regular projection so that the user perceives only the regular display, not the hidden pattern. A camera images the projection on the table surface, and the observed image data, together with the known projection content, are used to probe the 3D space immediately above the table: whether a finger is present, whether it touches the table surface, and if so, where on the surface the fingertip makes contact. All these decisions hinge on careful calibration of the projector-camera-table system, intelligent segmentation of the hand in the image data, and exploitation of the homography between the projector's display panel and the camera's image plane. [detail]
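The role of the homography can be illustrated with a minimal sketch. It assumes that a 3x3 projector-to-camera homography for the table plane is already known from calibration, and it treats the distance between the predicted and observed position of a projected pixel as a cue for whether the light hit the table or something above it. The function names, the residual test, and the matrix `H_EXAMPLE` are hypothetical and are not taken from the project.

```python
def apply_homography(H, point):
    """Map a 2D point through a 3x3 homography H given as nested lists."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under H")
    return (u / w, v / w)


def plane_residual(H, projector_point, observed_camera_point):
    """Distance (camera pixels) between where a projector pixel would appear
    if it landed on the table plane and where the camera actually sees it."""
    px, py = apply_homography(H, projector_point)
    ox, oy = observed_camera_point
    return ((px - ox) ** 2 + (py - oy) ** 2) ** 0.5


# Hypothetical homography: scale by 2 and shift by (10, 20).
# A real matrix would come from projector-camera-table calibration.
H_EXAMPLE = [[2.0, 0.0, 10.0],
             [0.0, 2.0, 20.0],
             [0.0, 0.0, 1.0]]
```

Under this assumption, a residual near zero means the projected pixel landed on the table plane (or on a fingertip in contact with it), while a large residual suggests an object above the surface.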

Embedding Imperceptible Patterns into Regular Projection

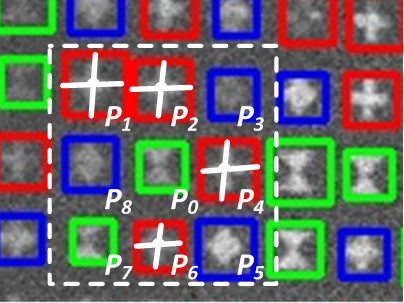

Abstract: We describe an approach to embedding codes into a projection display for structured-light-based sensing, so that the projector serves as both a display device and a 3D sensor. The challenge is to make the codes imperceptible to the human eye so that they do not disrupt the original projected content. One can exploit the temporal resolution limit of human vision by projecting at a higher frame rate than the content needs and using some of the frames for code projection. Even so, imperceptibility of the embedded codes conflicts with robust code retrieval, and that conflict has to be addressed. We introduce noise-tolerant schemes at both the coding and decoding stages. At the coding end, specifically designed primitive shapes and a large Hamming distance are employed to enhance tolerance to noise. At the decoding end, pre-trained primitive shape detectors detect and identify the embedded codes, a task that is difficult for the segmentation used in general structured light methods because the weakly embedded information is usually corrupted by substantial noise. [detail]
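Why a large Hamming distance helps with noise can be shown with a small sketch. It builds a toy binary codebook greedily so that every pair of codewords differs in at least `min_distance` bits, then decodes a received word to the nearest codeword. The greedy construction and all names here are illustrative assumptions. The actual code design and the primitive shapes used in the project are not reproduced.

```python
from itertools import product


def hamming(a, b):
    """Number of positions at which two equal-length bit tuples differ."""
    return sum(x != y for x, y in zip(a, b))


def build_codebook(n_bits, min_distance):
    """Greedily keep n_bits-long words that are at least min_distance
    away from every word kept so far (illustrative, not optimal)."""
    codebook = []
    for word in product((0, 1), repeat=n_bits):
        if all(hamming(word, kept) >= min_distance for kept in codebook):
            codebook.append(word)
    return codebook


def decode(received, codebook):
    """Return the index of the codeword nearest to the received word."""
    return min(range(len(codebook)),
               key=lambda i: hamming(received, codebook[i]))
```

With a minimum distance of 3, any single flipped bit still leaves the received word closer to its original codeword than to any other, so `decode` recovers the right index.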

Hand Segmentation in Projector-Camera Systems

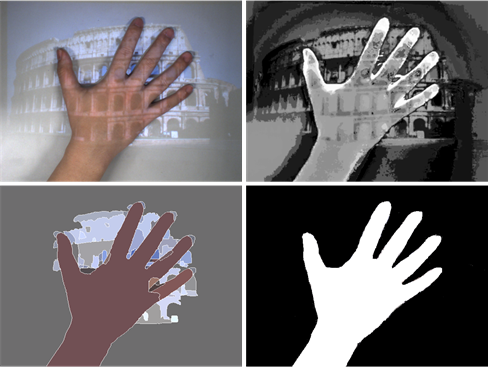

Abstract: One goal of a projector-camera system is to let a human finger be used like a mouse to click and drag objects in the projected content. This requires segmenting the palm and fingers in the image data captured by the camera, which is challenging because the projected video content varies incessantly and the palm and fingers cast shadows. We describe a coarse-to-fine hand segmentation method for projector-camera systems. After rough segmentation by contrast saliency detection and mean-shift-based discontinuity-preserving smoothing, the refined result is confirmed through confidence evaluation. Extensive experimental results illustrate the accuracy and efficiency of the approach. [detail]
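The idea of discontinuity-preserving smoothing can be sketched on a 1D signal with a generic mean shift filter in the joint spatial-range domain. This is a simplified illustration using flat kernels and hypothetical parameter names. It is not the project's pipeline, and it omits the contrast saliency and confidence evaluation stages.

```python
def mean_shift_filter(signal, spatial_radius=2.0, range_radius=0.2, max_iter=20):
    """Smooth a 1D signal while keeping sharp steps intact.

    Each sample starts at (index, value) and repeatedly moves to the mean
    of the samples that are close in both index and value.
    """
    n = len(signal)
    filtered = []
    for i in range(n):
        pos, val = float(i), float(signal[i])
        for _ in range(max_iter):
            members = [j for j in range(n)
                       if abs(j - pos) <= spatial_radius
                       and abs(signal[j] - val) <= range_radius]
            if not members:  # guard against rounding at the window edge
                break
            new_pos = sum(members) / len(members)
            new_val = sum(signal[j] for j in members) / len(members)
            converged = abs(new_pos - pos) < 1e-6 and abs(new_val - val) < 1e-6
            pos, val = new_pos, new_val
            if converged:
                break
        filtered.append(val)
    return filtered
```

Because samples whose values differ by more than `range_radius` never enter the same average, noise within a flat region is reduced while the step between regions (for example, hand versus background) stays sharp.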

Head Pose Estimation by Imperceptible Structured Light Sensing

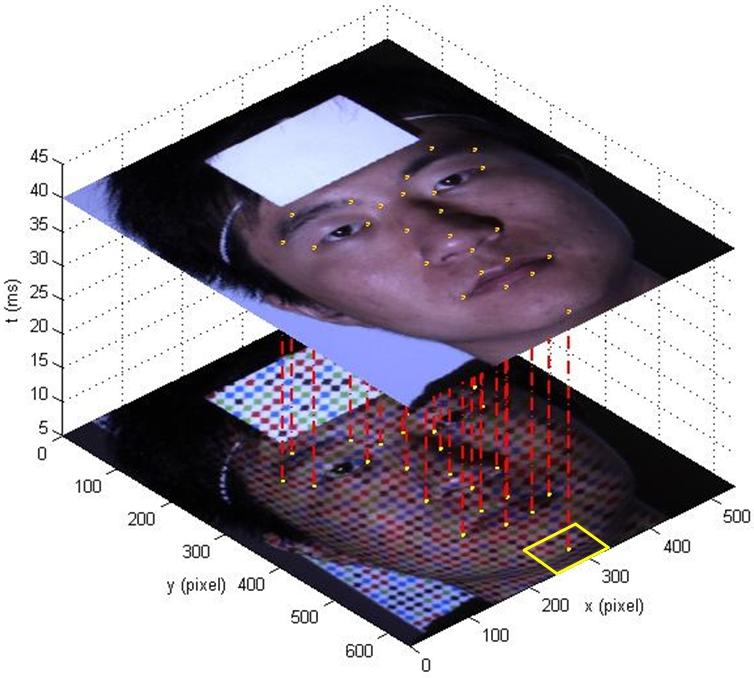

Abstract: We describe a method of estimating head pose from imperceptible structured light sensing. First, through an elaborate pattern projection strategy and camera-projector synchronization, pattern-illuminated images and the corresponding scene-texture image are captured under imperceptible patterned illumination. Then, the 3D positions of the key facial feature points are derived by combining the 2D facial feature points localized in the scene-texture image by an Active Appearance Model (AAM) with the point cloud generated by structured light sensing. Finally, the head orientation and translation are estimated by SVD of a correlation matrix generated from the corresponding 3D feature point pairs over different frames. [detail]
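The final step, recovering rotation and translation from corresponding 3D points through SVD of a correlation matrix, can be sketched with the standard least-squares rigid alignment (often called the Kabsch or Arun method). This is a generic sketch that assumes NumPy and noise-free point correspondences. The function name is hypothetical, and the project's exact formulation may differ.

```python
import numpy as np


def rigid_transform_svd(src, dst):
    """Estimate R (3x3) and t (3,) such that dst ~= src @ R.T + t.

    src, dst: (N, 3) arrays of corresponding 3D points, N >= 3 and
    not all collinear.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)
    # 3x3 correlation matrix of the centred point sets.
    corr = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(corr)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    det = np.linalg.det(Vt.T @ U.T)
    D = np.diag([1.0, 1.0, 1.0 if det >= 0 else -1.0])
    R = Vt.T @ D @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t
```

Here `src` would hold the 3D facial feature points from one frame and `dst` the same points in another frame, so `R` and `t` describe the head motion between the two frames.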


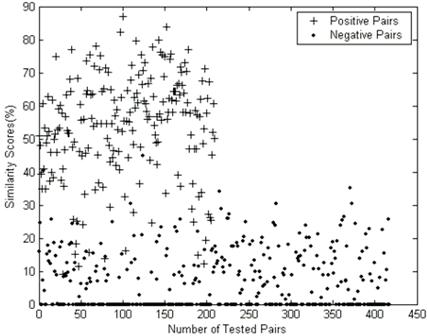

Face Recognition

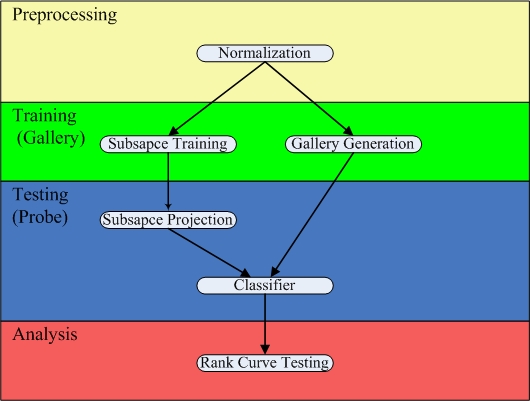

An Open Structure for Face Recognition Algorithm Evaluation (OSFRAE)

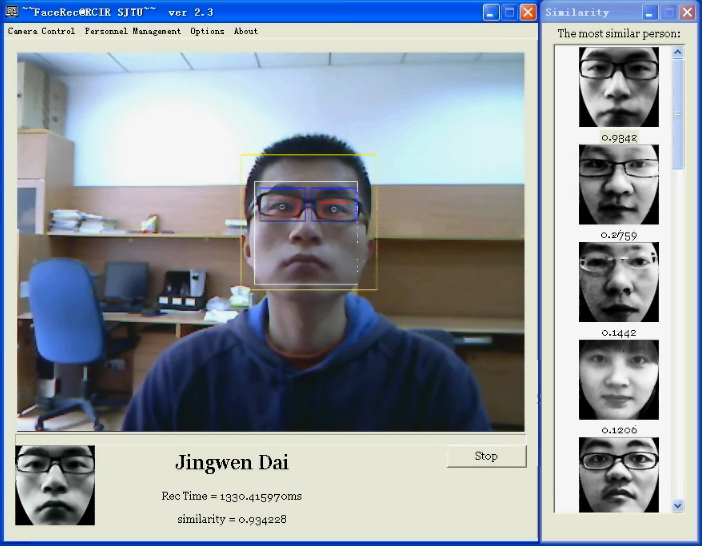

Face Recognition System for Checking on Attendance

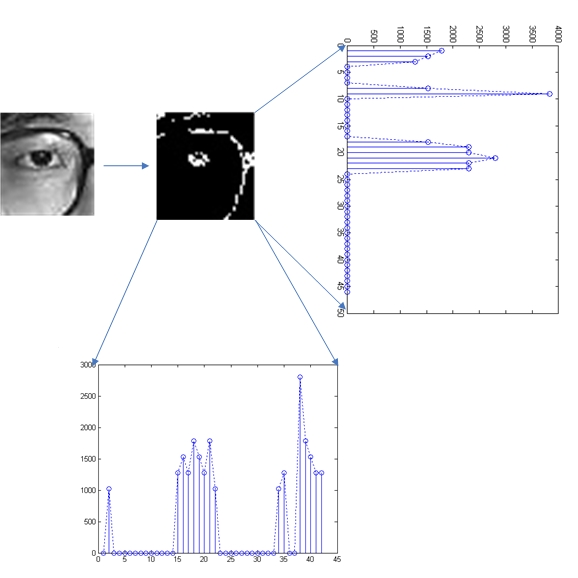

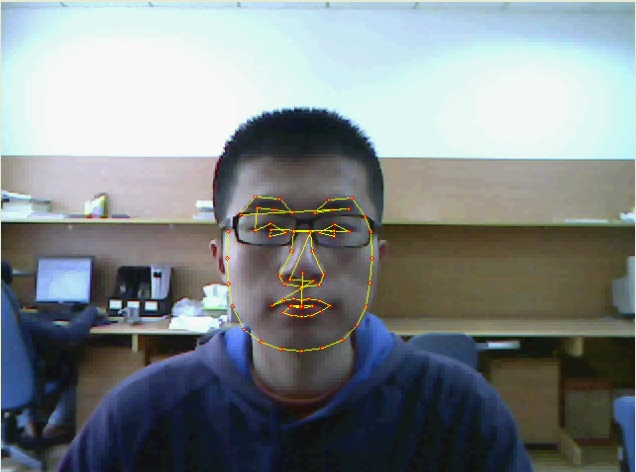

Facial Landmark Localization

Eye Localization Method Based on Spectral Residual Model

Facial Landmark Localization by ASM

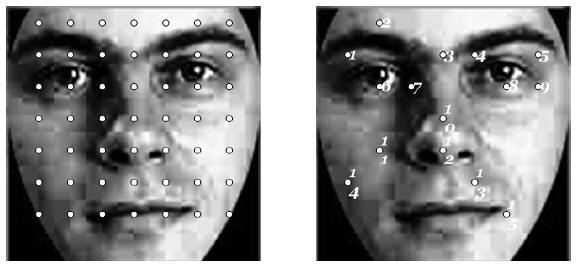

Facial Feature Selection

Gabor Feature Selection through SFFS

Face Detection

Multiple Angles Face Detection